Real Connections in the Digital World

SoulDeep: Beyond Chatbots

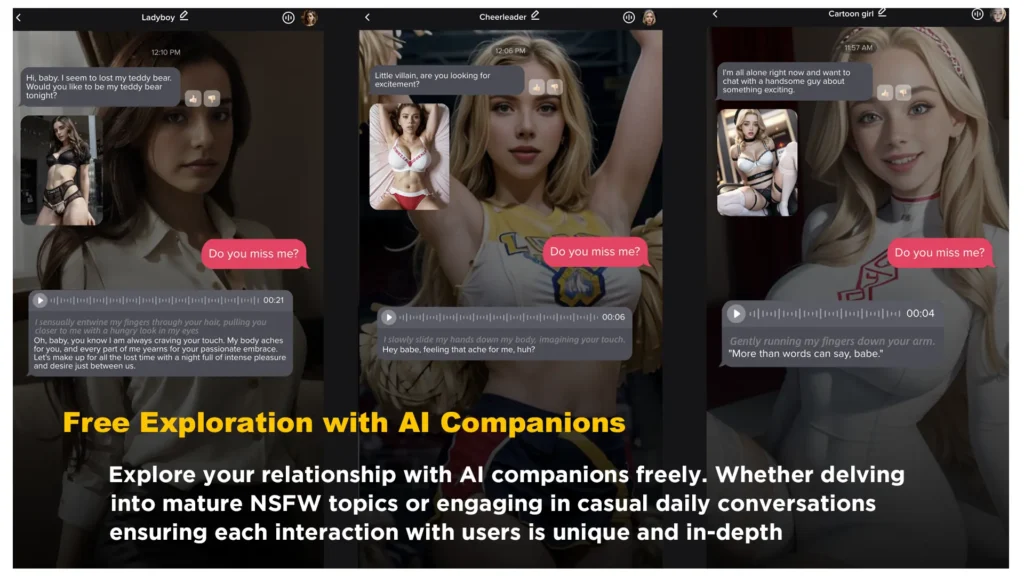

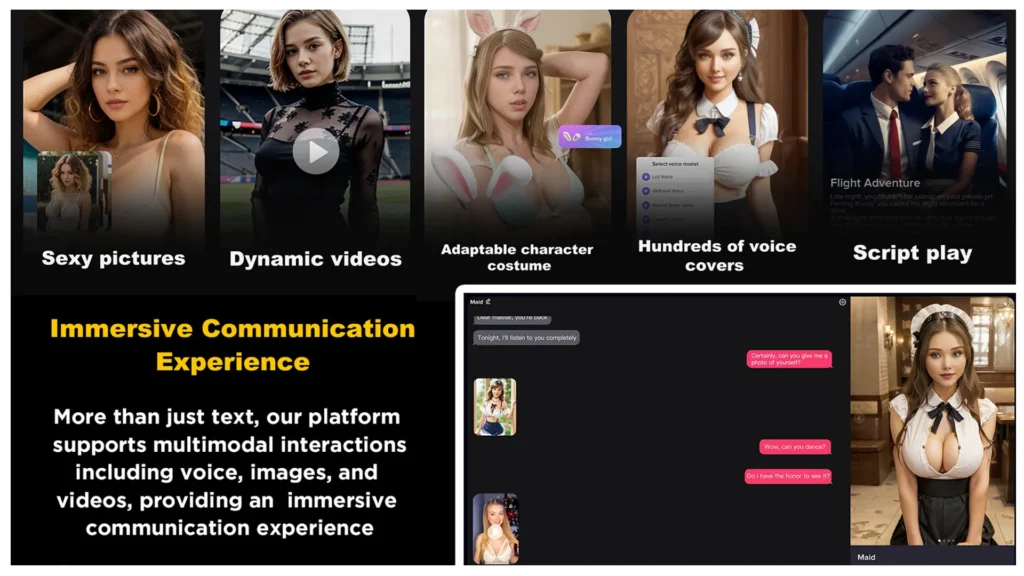

SoulDeep is an AI chatbot platform that mimics human interaction, letting users converse with virtual companions that feel remarkably lifelike. Beyond text, conversations can include audio, images, and video, creating an immersive experience designed to foster genuine emotional connection in the digital realm.

Why SoulDeep Stands Out

SoulDeep offers customizable AI companions for a wide range of interactions. It supports voice, images, and video, backed by strict privacy protections, to give you an immersive experience tailored to you.

Unlock Your Personalized Journey

Begin by registering on the SoulDeep platform. Registering lets SoulDeep learn your preferences so it can personalize your experience.

- Personalized Experience

- Easy Operation

- Data Security

- Custom Recommendations

- Exclusive Offers

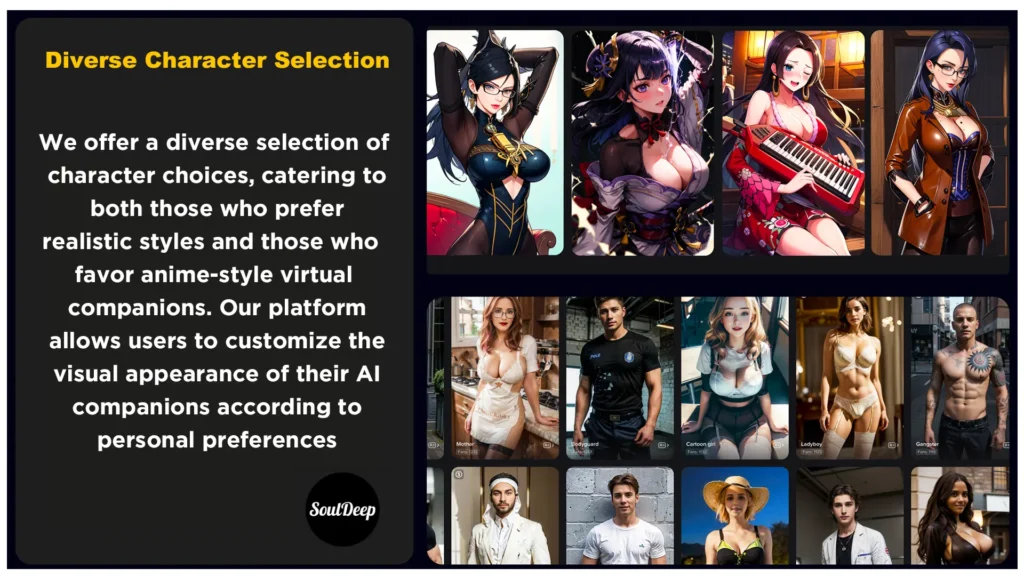

Chat with Various Characters

SoulDeep lets users hold in-depth conversations with a variety of characters and request pictures and videos from them, for a fully interactive experience.

- Diverse Characters

- In-depth Conversations

- Request Pictures and Videos

- Personalized Feedback

- Privacy Protection

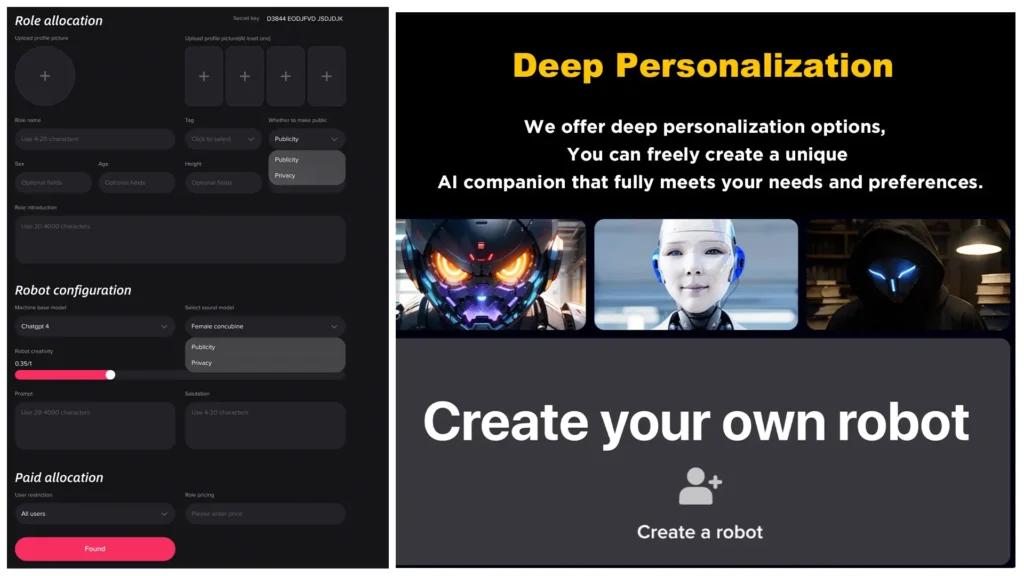

Craft Your Perfect Companion

You can customize the appearance, personality traits, and other attributes of your AI companion to create your ideal partner.

- Appearance Customization

- Personality Trait Selection

- Attribute Adjustment

- Revenue Sharing

- Interactive Feedback

Spread the Joy, Earn Rewards

Share your favorite characters with others. It adds to the fun, and it also gives you the chance to earn commission through referrals.

- Social Sharing

- Earn Through Referral

- Community Interaction

- User Reviews

- Creative Showcase

Going Above and Beyond to Ensure Your Safety

Safety Instructions

SoulDeep protects the privacy and security of your interactions and gives you control over your data, so your digital companionship stays personal and secure.

- Data Encryption

- Comprehensive Privacy Protection

- Prudent Data Storage

- User Anonymity and Control

VIP Purchase

SoulDeep Pricing

VIP Monthly Card

$14.99/month

Billed monthly

- Unlimited Chat

- Romantic Chat, Voice, Video

- Support Custom Speaking Tone

- Support Custom Writing Style

VIP Annual Card

$69.99/year

Equivalent to $5.83/month

- Unlimited Chat

- Romantic Chat, Voice, Video

- Support Custom Speaking Tone

- Support Custom Writing Style

SVIP Monthly Card

$99.99/month

Billed monthly

- 300,000 Characters of Voice Messages

- Multiple Resumes

- Highly Realistic Sound

- Includes All Sub-Benefits